In this tutorial I want to talk about the pros and cons of queueing or buffering messages on an MQTT network.

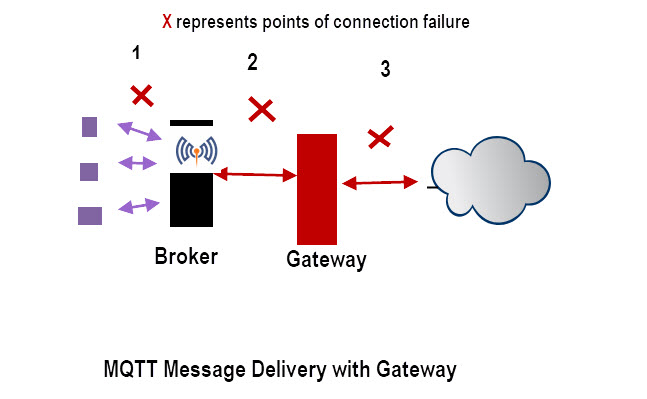

I want to start by discussing points of connection failure and the affects of failure by using the reference diagram below:

In the above configuration we have sensors connecting to a local MQTT broker and a Gateway/router responsible for sending MQTT data to the cloud for storage/processing.

Looking at the diagram, you can see three points of connection failure as well as three points of equipment failure (sensor, broker, gateway).

In this tutorial we will focus on connection failures.

Detecting a Network Failure

A network failure can sometimes be detected almost immediately, but detection usually relies on the keep alive mechanism, whose interval is 60 secs by default in many clients (including the Python client).

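So a network failure should definitely be detected within 60*1.5 = 90 secs, because the broker must disconnect a client it has not heard from in one and a half times the keep alive interval.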
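
To make the one-and-a-half-times rule concrete, here is a minimal Python sketch of the timeout check. The KeepAliveMonitor class is hypothetical and not part of any MQTT library; a fake clock is passed in so the example runs instantly.

```python
import time
from typing import Callable


class KeepAliveMonitor:
    """Hypothetical keep alive check: the link counts as failed when nothing
    has been received for more than 1.5 * keepalive seconds."""

    def __init__(self, keepalive: float,
                 clock: Callable[[], float] = time.monotonic):
        self.keepalive = keepalive
        self.clock = clock
        self.last_seen = clock()

    def packet_received(self) -> None:
        # Any control packet (PUBLISH, PINGREQ, ...) restarts the timer.
        self.last_seen = self.clock()

    def deadline(self) -> float:
        return 1.5 * self.keepalive

    def is_expired(self) -> bool:
        return self.clock() - self.last_seen > self.deadline()


# Fake clock so the example runs instantly.
now = [0.0]
monitor = KeepAliveMonitor(keepalive=60, clock=lambda: now[0])
now[0] = 89.0
print(monitor.is_expired())  # False: still inside the 90 s window
now[0] = 91.0
print(monitor.is_expired())  # True: 91 s > 1.5 * 60 s
```

Halving the keep alive to 30 secs would halve the worst-case window to 45 secs, at the cost of more ping traffic on idle connections.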

If we want it shorter, we can decrease the keep alive interval or use a detection mechanism outside of MQTT, e.g. a network ping.
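
One possible out-of-band check is sketched below. It is a substitute for a real ping rather than a ping: ICMP usually needs elevated privileges, so the hypothetical broker_reachable() function simply tries to open a TCP connection to the broker port (1883 is assumed). A successful connect only shows that the port is reachable, not that the MQTT session itself is healthy.

```python
import socket


def broker_reachable(host: str, port: int = 1883, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` s.

    Used here as a stand-in for a ping: it proves the broker port is
    reachable, not that the MQTT session itself is healthy.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure...
        return False


if __name__ == "__main__":
    # "localhost" is a placeholder; point this at your own broker.
    print(broker_reachable("localhost"))
```

You would call this periodically, perhaps every few seconds, and treat several consecutive failures as a network outage.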

Once we are aware of a network failure, we then need to decide on a course of action, which generally boils down to whether we hold (buffer) messages or not.

Message Buffering or Queueing

MQTT provides three QOS levels (0, 1 and 2), and QOS levels 1 and 2 can be used to guarantee message delivery.

Although using QOS of 1 or 2 will guarantee message delivery in the case of a short break in the network, what happens for an extended break or a break with a high message throughput?

Buffering can occur on the client and the broker.

If the network connection fails between a client and broker then the client needs to buffer outgoing messages until it is restored.

Message buffering is done in memory and most clients will buffer or queue messages by default.

The client will usually have a setting that governs buffering, and both the Python and node-red clients default to unlimited. (In the Python paho client the limit is set with max_queued_messages_set(), where the default of 0 means unlimited.)

That doesn't mean that all messages will be queued, as queueing will eventually fail once the available memory is used up.

When this buffer is full, the client has no option but to overwrite existing messages or discard new ones.
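
The two choices can be sketched with a small fixed-size buffer. BoundedBuffer and its policy names are hypothetical and are not the API of the Python or node-red client; the sketch only illustrates which messages each policy keeps when the buffer overflows.

```python
from collections import deque


class BoundedBuffer:
    """Hypothetical fixed-size outgoing message buffer.

    policy="drop_oldest": a full buffer overwrites the oldest message.
    policy="drop_newest": a full buffer rejects the new message.
    """

    def __init__(self, maxlen: int, policy: str = "drop_oldest"):
        if policy not in ("drop_oldest", "drop_newest"):
            raise ValueError("unknown policy: " + policy)
        self.policy = policy
        self.maxlen = maxlen
        self.items = deque()
        self.dropped = 0

    def put(self, msg) -> bool:
        """Queue msg; return False if a message (old or new) was dropped."""
        if len(self.items) < self.maxlen:
            self.items.append(msg)
            return True
        self.dropped += 1
        if self.policy == "drop_oldest":
            self.items.popleft()
            self.items.append(msg)
        return False

    def drain(self):
        """Remove and return all queued messages, oldest first."""
        out = list(self.items)
        self.items.clear()
        return out


oldest = BoundedBuffer(3, "drop_oldest")
newest = BoundedBuffer(3, "drop_newest")
for reading in range(5):
    oldest.put(reading)
    newest.put(reading)
print(oldest.drain())  # [2, 3, 4]: the latest readings survive
print(newest.drain())  # [0, 1, 2]: the earliest readings survive
```

Overwriting the oldest messages suits consumers that care about current values; discarding new ones preserves the start of the outage but leaves the receiver with stale data.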

You should be aware that small clients implemented on sensors will have very little buffering capability.

Although buffering messages using a QOS of 1 would seem the most logical thing to do, you need to be aware of the consequences.

Problems with Buffering Messages?

If the network is down for a very short time (seconds not minutes) then holding messages isn’t really a problem as they will be sent with a short delay.

However if it is down for minutes or hours does the application receiving the data really need to receive those messages or can it safely skip them?

As an example, let's assume we have 100 sensors sending temperature measurements every second to a broker, and the broker sends messages to the cloud.

Now if we have a 5 minute network break to the cloud, we will have to hold 100*5*60 = 30000 messages.

Once the connection is restored, we then need to send these 30000 messages and process them on the receiver.

These messages would be delivered ahead of new incoming messages, so there would be an even longer delay before the application was receiving up-to-date data rather than historical data.
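
The arithmetic above can be extended to estimate how long the receiver stays behind. This sketch assumes, hypothetically, that the receiver can process 400 messages per second while the 100 sensors keep producing 100 messages per second, and that the backlog is delivered in order before new data; the backlog then shrinks at only 400 - 100 = 300 messages per second.

```python
def backlog(sensors: int, msgs_per_sec_each: float, outage_secs: float) -> float:
    """Messages that pile up while the uplink is down."""
    return sensors * msgs_per_sec_each * outage_secs


def catch_up_time(backlog_msgs: float, arrival_rate: float,
                  process_rate: float) -> float:
    """Seconds until the receiver is back to live data.

    New messages keep arriving at arrival_rate, so the backlog only
    shrinks at (process_rate - arrival_rate) messages per second.
    """
    if process_rate <= arrival_rate:
        return float("inf")  # the receiver never catches up
    return backlog_msgs / (process_rate - arrival_rate)


held = backlog(sensors=100, msgs_per_sec_each=1, outage_secs=5 * 60)
print(held)  # 30000
print(catch_up_time(held, arrival_rate=100, process_rate=400))  # 100.0
```

So under these assumptions a 5 minute outage leaves the application looking at old data for a further 100 secs after the network comes back.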

The decision on whether or not to buffer messages cannot be taken without regard to the applications that are consuming those messages.

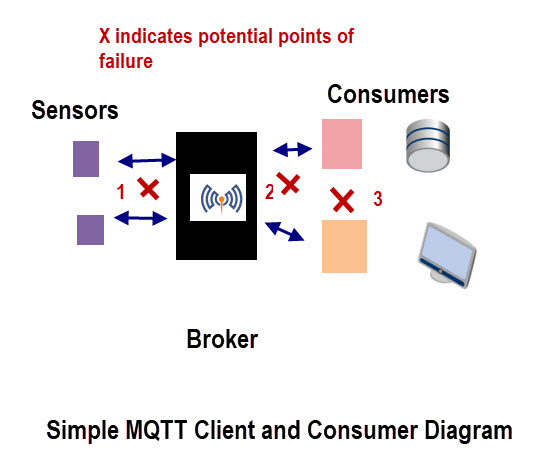

MQTT Consumers

With MQTT you can have multiple consumers of the sensor data, and so if you take an example of a data logging consumer and an alarm dashboard then clearly the logger would require historical data whereas the alarm dashboard doesn’t.

This is shown in the diagram below, and if we assume that the sensors are MQTT clients that can buffer data:

Therefore not sending all messages will impact the data logging but not the alarm dashboard.

So although MQTT allows multiple consumers of the data, it also means that you need to keep all of them in mind when publishing the data.
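
As a hypothetical illustration of the two consumers, the sketch below assumes each payload is JSON carrying a ts timestamp in seconds (MQTT does not add one for you). The logger keeps every reading, while the dashboard skips readings older than a chosen max_age, so a burst of buffered messages after an outage does not trigger stale alarms. The class names are made up for this example.

```python
import json
from typing import Callable, List


class LoggerConsumer:
    """Data logger: keeps every reading, however late it arrives."""

    def __init__(self) -> None:
        self.rows: List[dict] = []

    def on_message(self, payload: bytes) -> None:
        self.rows.append(json.loads(payload))


class DashboardConsumer:
    """Alarm dashboard: ignores readings older than max_age seconds."""

    def __init__(self, max_age: float, now: Callable[[], float]) -> None:
        self.max_age = max_age
        self.now = now
        self.latest: List[dict] = []

    def on_message(self, payload: bytes) -> None:
        reading = json.loads(payload)
        if self.now() - reading["ts"] <= self.max_age:
            self.latest.append(reading)  # fresh: check alarm limits here
        # Stale readings replayed after an outage are simply dropped.


# Replay a 5 minute backlog (one reading per second) at t = 300 s.
logger = LoggerConsumer()
dashboard = DashboardConsumer(max_age=10, now=lambda: 300.0)
for ts in range(300):
    msg = json.dumps({"ts": ts, "temp": 21.5}).encode()
    logger.on_message(msg)
    dashboard.on_message(msg)
print(len(logger.rows), len(dashboard.latest))  # 300 10
```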

In the simple diagram above, the MQTT broker is capable of buffering data and so, probably, are the sensors; however, in both cases buffering will be very limited and only suitable for short outages.

In the above diagram a failure of the network at point 2 would mean that the broker needs to buffer messages.

The default for mosquitto is 1000 messages per client (set with the max_queued_messages option in mosquitto.conf).

Control Buffering Using QOS

It is possible to control message buffering using QOS levels on either the publisher or subscriber.

As an example consider a sensor publishing with a QOS of 1.

If the data logger subscribes with a QOS of 1 (and uses a persistent session, i.e. clean session set to false), then the broker will buffer messages for it if needed.

If the alarm client subscribes with a QOS of 0, then no messages will be buffered by the broker for this client.
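
The rule behind these two cases is that the broker delivers at the lower of the publish and subscribe QOS, and it only stores messages for a disconnected subscriber that has a persistent session. The MQTT specification makes storing QOS 0 messages for offline clients optional (mosquitto does not do it by default), so this sketch ignores that case. The function names are hypothetical.

```python
def effective_qos(publish_qos: int, subscribe_qos: int) -> int:
    """The broker forwards a message at the lower of the two QoS levels."""
    return min(publish_qos, subscribe_qos)


def broker_queues_while_offline(publish_qos: int, subscribe_qos: int,
                                persistent_session: bool) -> bool:
    """Will the broker hold messages for this subscriber while it is offline?

    Only for a persistent session (clean session = False) and a delivery
    QoS of 1 or 2; optional QoS 0 queueing is ignored in this sketch.
    """
    return persistent_session and effective_qos(publish_qos, subscribe_qos) > 0


# Sensor publishes with QoS 1.
print(broker_queues_while_offline(1, 1, persistent_session=True))  # True: data logger
print(broker_queues_while_offline(1, 0, persistent_session=True))  # False: alarm client
```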

Network Failure and Client Behaviour

A client publishing with a QOS of 1 or 2 will quickly notice a problem, because it doesn't receive an acknowledgement (a PUBACK for QOS 1, a PUBREC for QOS 2).

A missing acknowledgement means that the message stays queued for retransmission, but it isn't logged as a connection failure.
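
A minimal sketch of this behaviour, assuming a client that tracks QOS 1 messages by packet identifier. InflightTracker is hypothetical and is not how any particular client library is implemented; it simply shows that an unacknowledged message is retained rather than reported as an error.

```python
from collections import OrderedDict


class InflightTracker:
    """Hypothetical tracker for QoS 1 publishes awaiting their PUBACK.

    A missing PUBACK raises no error; the message just stays in `pending`
    until it is acknowledged or resent after a reconnect.
    """

    def __init__(self):
        self._next_mid = 1
        self.pending = OrderedDict()  # packet id -> (topic, payload)

    def publish(self, topic: str, payload: bytes) -> int:
        mid = self._next_mid
        self._next_mid = mid % 65535 + 1  # MQTT packet ids run 1..65535
        self.pending[mid] = (topic, payload)
        return mid

    def puback(self, mid: int) -> None:
        self.pending.pop(mid, None)

    def to_resend(self):
        """Messages a client would retransmit (DUP flag set) after reconnecting."""
        return list(self.pending.items())


t = InflightTracker()
a = t.publish("sensors/1/temp", b"21.5")
b = t.publish("sensors/2/temp", b"22.0")
t.puback(a)  # only the first PUBACK arrives before the link drops
print(t.to_resend())  # [(2, ('sensors/2/temp', b'22.0'))]
```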

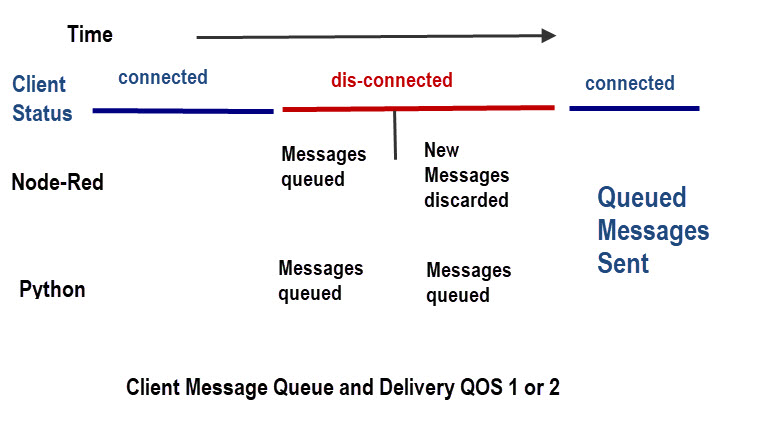

Clients will work differently and so it is important to be aware of how your client reacts to a connection failure. The diagram below illustrates this.

With the Python client, messages are queued for transmission even after the client has detected a connection failure.

With node-red, the client queues messages until it detects the connection failure, after which it discards new incoming messages.

This means that with node-red we need to add an extra queue if we want to keep all messages until the connection is re-established.
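
The extra queue could look something like the sketch below. It is written in Python to match the other examples (in node-red you would implement the same logic in a function node); the send callable and the on_connect/on_disconnect hooks are hypothetical stand-ins for your client's own publish call and connection events.

```python
from collections import deque
from typing import Callable


class OfflineQueue:
    """Hold messages while the MQTT link is down and flush them on reconnect.

    `send` is a hypothetical callable that hands one message to the MQTT
    client; it is only called while the link is believed to be up.
    """

    def __init__(self, send: Callable[[str, bytes], None], maxlen: int = 10000):
        self.send = send
        self.connected = False
        self.queue = deque(maxlen=maxlen)  # oldest dropped once full

    def publish(self, topic: str, payload: bytes) -> None:
        if self.connected:
            self.send(topic, payload)
        else:
            self.queue.append((topic, payload))

    def on_disconnect(self) -> None:
        self.connected = False

    def on_connect(self) -> None:
        self.connected = True
        while self.queue and self.connected:
            self.send(*self.queue.popleft())


sent = []
q = OfflineQueue(lambda topic, payload: sent.append((topic, payload)))
q.publish("a", b"1")  # link down: held
q.on_connect()        # flushes the held message
q.publish("b", b"2")  # link up: sent immediately
print(sent)  # [('a', b'1'), ('b', b'2')]
```

The maxlen bound is a design choice: without it this queue would have the same unlimited-memory problem described earlier.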


Summary

MQTT applications (consumers) have different data requirements, and you must take these into account when deciding whether or not to buffer MQTT messages, especially over a long period.

Simply setting the QOS to 1 on all connections is usually not a suitable choice.

Personally, I would use a QOS of 0 by default and 1 only when really needed, while being aware of the potential problems.

Discussion

This tutorial attempted to give an overview of potential problems arising from using a QOS of 1 and queueing messages for guaranteed delivery. As such, I would welcome feedback based on your own experience.


Hi Steve, what is the maximum queue size? My broker is holding a maximum of 120 messages.

How do I increase the queue size?

Hi

Take a look here

http://www.steves-internet-guide.com/mqtt-broker-message-restrictions/

rgds

Steve

Hello Steve

This issue of buffering took me a whole week to debug. I had a tracker publishing telemetry location data every 3 seconds. In the event of a network failure, it would queue old data and discard new data, so someone tracking the vehicle would not see its location in real time. I solved it by setting the QOS to 0, but I am wondering if there is a better solution, because I noticed that with a QOS of 0 the location data sometimes doesn't reach the receiver and the car appears to be static again, which is what I don't want.

What client are you using? Did you notice that it detected a network failure?

rgds

steve

Hi Steve,

Is there a limit to the buffer.length size?

Will the data still persist after a power and network outage, or does this only work for a network outage?

It depends on how it is stored. Most clients just use memory, which is limited, and you will lose the messages when the power goes off. However, you could save the messages to a file or database so that the number of messages is practically unlimited and you don't lose them in a power failure.
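
A minimal sketch of the database approach, using Python's built-in sqlite3 module. DiskQueue and the outbox table are hypothetical names; a message is deleted only when ack() is called, which you would do once the MQTT client confirms delivery.

```python
import os
import sqlite3
import tempfile


class DiskQueue:
    """Hypothetical FIFO message queue stored in SQLite so it survives power loss."""

    def __init__(self, path: str):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, topic TEXT, payload BLOB)"
        )
        self.db.commit()

    def put(self, topic: str, payload: bytes) -> None:
        with self.db:  # commits the insert, or rolls back on error
            self.db.execute(
                "INSERT INTO outbox (topic, payload) VALUES (?, ?)", (topic, payload)
            )

    def peek(self):
        """Oldest message as (id, topic, payload), or None if empty."""
        return self.db.execute(
            "SELECT id, topic, payload FROM outbox ORDER BY id LIMIT 1"
        ).fetchone()

    def ack(self, row_id: int) -> None:
        """Delete a message only after it has been sent successfully."""
        with self.db:
            self.db.execute("DELETE FROM outbox WHERE id = ?", (row_id,))

    def __len__(self) -> int:
        return self.db.execute("SELECT COUNT(*) FROM outbox").fetchone()[0]

    def close(self) -> None:
        self.db.close()


path = os.path.join(tempfile.mkdtemp(), "outbox.db")
q = DiskQueue(path)
q.put("sensors/1/temp", b"21.5")
q.close()
q = DiskQueue(path)  # e.g. after a restart
row = q.peek()
print(row)  # (1, 'sensors/1/temp', b'21.5')
q.ack(row[0])
print(len(q))  # 0
```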

Hi Steve

Thank you for the article http://www.steves-internet-guide.com/mqtt-client-message-queue-delivery/

We have 4 critical applications running on premises, doing request and response with another 4000 applications running on traffic controllers at traffic intersections.

Can you please let us know: if we use request and response messages from MQTT 5.x, will the MQTT broker guarantee message delivery even if it is very busy with over a million messages being published?

Also, do you know if there are plans for MQTT to support message priority?

Thank you for your help and support

Regards, Bao Quach

In response to the first question, I don't know, as it all depends on your hardware and network setup.

I am not on the MQTT standards body, but I don't expect any more changes to the protocol.

Rgds

Steve