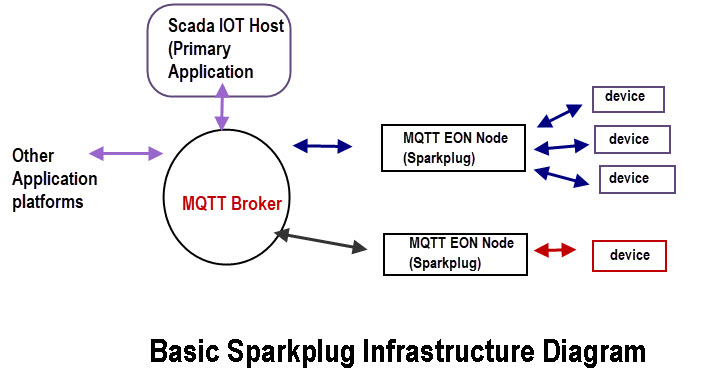

In a Sparkplug MQTT network there is no direct link between end nodes and the primary application (control node).

All communication between nodes is via a central MQTT server.

In this tutorial we will look at the message payloads and how the various components establish a session with the MQTT broker and what they publish.

A diagram of a Sparkplug network is shown below for reference.

Sparkplug Payload Basics

The Sparkplug payload uses Google Protocol Buffers, which is a way of serializing structured data into a compact binary format. It serves a similar purpose to JSON.

Google Protocol Buffers are more complex than JSON, but that complexity is taken care of by libraries, which are available for all major languages.

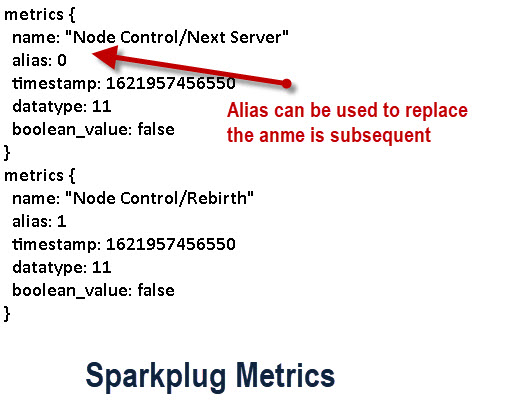

A Sparkplug payload contains a series of metrics (readings). Each metric has a:

- Name

- Alias

- Time stamp

- Data type

- Value
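As a rough illustration of the fields listed above, a metric can be modelled as a small record. This is only a sketch: the class name `Metric`, the helper `now_ms` and the millisecond timestamps are my assumptions, and the real payload is a protocol buffers message, not a Python dataclass.

```python
from dataclasses import dataclass
from typing import Any, Optional
import time


@dataclass
class Metric:
    """Illustrative stand-in for one Sparkplug metric (not the protobuf class)."""
    name: str
    alias: Optional[int]
    timestamp: int   # assumed to be milliseconds since the Unix epoch
    datatype: int    # numeric data type code defined by the client library
    value: Any


def now_ms() -> int:
    """Current time in milliseconds."""
    return int(time.time() * 1000)


# Hypothetical reading: a supply voltage sent as a Float (code 9 in the Python library)
reading = Metric(name="Supply Voltage (V)", alias=1, timestamp=now_ms(), datatype=9, value=12.3)
print(reading)
```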

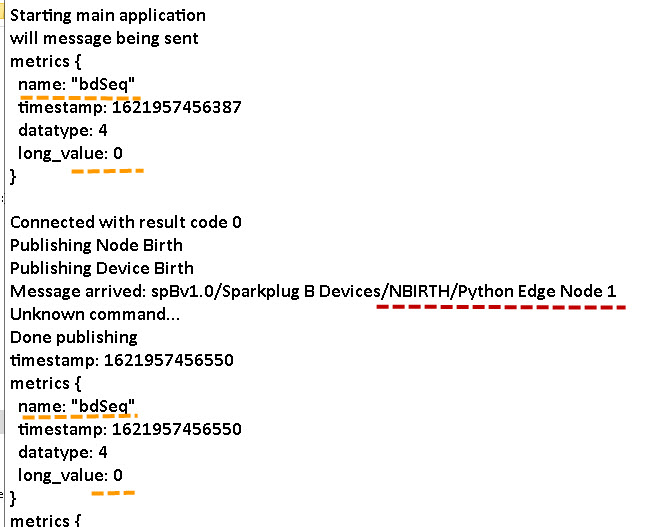

Below is an example taken from an EON birth message (NBIRTH):

Note: The alias published in the metric can be used in place of the name in subsequent messages.
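To make the alias idea concrete, here is a minimal sketch of how a receiver might remember the aliases announced in a birth message and use them to name metrics in later messages. The function names and the sample metrics are hypothetical and are not taken from any Sparkplug library.

```python
def build_alias_table(birth_metrics):
    """Map alias -> name from the (name, alias) pairs seen in a birth message."""
    return {alias: name for name, alias in birth_metrics if alias is not None}


def resolve_name(metric_name, metric_alias, alias_table):
    """Return the metric name, falling back to the alias table when no name was sent."""
    if metric_name:
        return metric_name
    return alias_table.get(metric_alias, f"<unknown alias {metric_alias}>")


# Hypothetical metrics announced in a birth message
table = build_alias_table([("Temperature", 1), ("Pressure", 2)])

# A later data message that carries only the alias
print(resolve_name("", 2, table))  # prints Pressure
```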

All messages published have a sequence number starting at 0 and ending at 255 after which it is reset to 0.
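The wrap-around described above is simple modulo arithmetic. A minimal sketch (the function name `next_seq` is my own):

```python
def next_seq(seq: int) -> int:
    """Return the sequence number that follows seq, wrapping from 255 back to 0."""
    return (seq + 1) % 256


seq = 0
for _ in range(256):   # publish 256 messages
    seq = next_seq(seq)
print(seq)  # prints 0, the counter has wrapped
```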

All messages include a time stamp for the message and also a time stamp for each metric in the message.

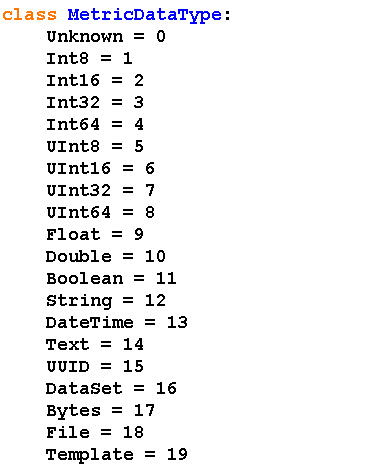

Data Types aren’t part of the Sparkplug B specification but are defined in the client libraries. A numeric code is assigned to each data type. Below shows the Python client library data type declaration.
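For reference, the codes are along the following lines. This listing follows the MetricDataType declaration in sparkplug_b.py as I understand it. Wrapping it in an `IntEnum` is my own choice for readability, so treat it as a sketch and check the values against your copy of the library.

```python
from enum import IntEnum


class MetricDataType(IntEnum):
    """Numeric data type codes (verify against sparkplug_b.py before relying on them)."""
    Unknown = 0
    Int8 = 1
    Int16 = 2
    Int32 = 3
    Int64 = 4
    UInt8 = 5
    UInt16 = 6
    UInt32 = 7
    UInt64 = 8
    Float = 9
    Double = 10
    Boolean = 11
    String = 12
    DateTime = 13
    Text = 14
    UUID = 15
    DataSet = 16
    Bytes = 17
    File = 18
    Template = 19


# Turn a numeric code from a metric back into a readable name
print(MetricDataType(12).name)
```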

Sparkplug Message Basics

There are five basic message types:

- Birth

- Death

- Data

- Command

- State
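In the topic, the basic type is combined with a prefix: N for node messages and D for device messages (NBIRTH, DDATA and so on), while STATE stands on its own. A small sketch of that mapping, where the function name `classify` is hypothetical:

```python
KINDS = {"BIRTH": "Birth", "DEATH": "Death", "DATA": "Data", "CMD": "Command"}
SCOPES = {"N": "node", "D": "device"}


def classify(message_type: str):
    """Return (basic type, scope) for a topic message type such as NBIRTH."""
    if message_type == "STATE":
        return ("State", "primary application")
    scope = SCOPES.get(message_type[:1])
    kind = KINDS.get(message_type[1:])
    if scope is None or kind is None:
        raise ValueError(f"not a Sparkplug message type: {message_type!r}")
    return (kind, scope)


for message_type in ("NBIRTH", "NDEATH", "DDATA", "DCMD", "STATE"):
    print(message_type, classify(message_type))
```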

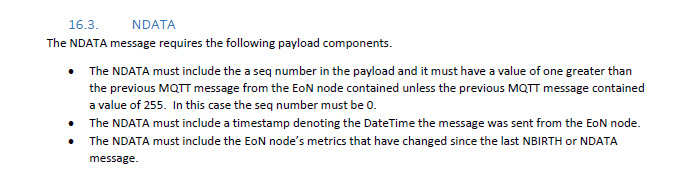

The content of these messages varies by message type. The required components of each message type are detailed in section 16 of the specification.

Below is a screen shot taken from the specification for a NDATA message.

How Sparkplug Components Communicate

As shown in the diagram above we have several components in a Sparkplug system. There are:

- Primary application

- EON (Edge of Network) nodes

- Device Nodes

In this section we look at the messages and message payloads that are exchanged by these nodes.

Primary Application Session Establishment and Messages

When the control application comes online it publishes:

- Last Will message

- Birth Message

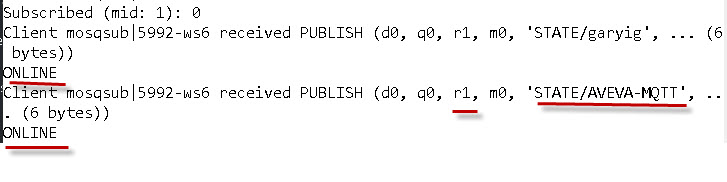

The topic used is STATE/scada_host_id. The payload is a simple UTF-8 string and does not use the Google protocol buffers format used by other messages.

The last will message is registered as part of the MQTT connection. It uses the topic STATE/scada_host_id with a plain text payload of OFFLINE, and the retain flag is set.

The birth message is published to the same topic, STATE/scada_host_id, with a payload of ONLINE, and the retain flag is set.

You can see this in the screen shot below captured using the mosquitto_sub tool:

Notice that the retained flag is set.

So the process for the primary application is:

- Connect

- Publish State

- Subscribe to spBv1.0/#

It is now ready to receive messages and publish commands.
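The sequence above can be sketched in Python as follows. The function expects a client object with `will_set`, `connect`, `publish` and `subscribe` methods, which is how the paho-mqtt client is used. To keep the sketch runnable without a broker I pass in a small recording stand-in. The broker name, the function name and the choice of QoS 1 are my assumptions, and a real client would normally publish and subscribe from its on-connect callback.

```python
class RecordingClient:
    """Stand-in for a real MQTT client: it only records the calls made on it."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def record(*args, **kwargs):
            self.calls.append((name, args, kwargs))
        return record


def start_primary_application(client, broker_host, host_id):
    """Run the connect, publish state, subscribe sequence for a primary application."""
    state_topic = f"STATE/{host_id}"
    # The will travels inside the MQTT CONNECT packet, so set it before connecting.
    client.will_set(state_topic, payload="OFFLINE", qos=1, retain=True)
    client.connect(broker_host)
    # Birth message: plain text, retained, same topic as the will.
    client.publish(state_topic, payload="ONLINE", qos=1, retain=True)
    # Listen to everything in the Sparkplug B namespace.
    client.subscribe("spBv1.0/#")


client = RecordingClient()
start_primary_application(client, "broker.example", "scada_host_id")
for name, args, kwargs in client.calls:
    print(name, args, kwargs)
```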

EON Node Session Establishment and Messages

When the EON (Edge of Network) node goes online it does the following:

- Connects to the MQTT broker and sets the Last Will and Testament message (NDEATH)

- Subscribes to the NCMD and DCMD topics on spBv1.0/group/NCMD/myNodeName/# and spBv1.0/group/DCMD/myNodeName/#

- Publishes a birth message NBIRTH on the topic spBv1.0/group/NBIRTH/myNodeName
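The steps above can be written down as an ordered list of topics. This is only a sketch of the ordering and topic layout: the function name is hypothetical, no MQTT traffic is generated, and I assume the NDEATH topic follows the same pattern as the NBIRTH topic.

```python
def edge_node_startup(group: str, node: str):
    """Return the ordered (action, topic) steps an EON node performs when going online."""
    base = f"spBv1.0/{group}"
    return [
        ("will", f"{base}/NDEATH/{node}"),        # registered in the CONNECT packet
        ("subscribe", f"{base}/NCMD/{node}/#"),   # commands for the node itself
        ("subscribe", f"{base}/DCMD/{node}/#"),   # commands for attached devices
        ("publish", f"{base}/NBIRTH/{node}"),     # birth message, sent once connected
    ]


for action, topic in edge_node_startup("group", "myNodeName"):
    print(f"{action:10} {topic}")
```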

Note: the message payload for NDEATH and NBIRTH uses Google protocol buffers format.

Below is a screen shot showing the will message being sent and the Birth message from the EON node.

For the Will message (NDEATH) the only metric published is a sequence number (bdSeq).

This sequence number starts at 0 and is incremented on each connect.

The NBIRTH message also includes this same sequence number as shown in the screen shot above.
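A minimal sketch of that bookkeeping is shown below. The class name is my own, the counter lives only in memory, and any wrap-around or persistence across restarts is left out, so check the specification for those details.

```python
class BirthDeathSequence:
    """Hands out the bdSeq value shared by the NDEATH and NBIRTH of one connection."""

    def __init__(self):
        self._next = 0  # starts at 0

    def for_new_connection(self) -> int:
        """Return the bdSeq for this connect and advance the counter for the next one."""
        value = self._next
        self._next += 1
        return value


counter = BirthDeathSequence()
for attempt in range(3):
    bd_seq = counter.for_new_connection()
    ndeath_metrics = [("bdSeq", bd_seq)]   # goes into the will message
    nbirth_metrics = [("bdSeq", bd_seq)]   # the NBIRTH carries the same value plus the node metrics
    print(attempt, ndeath_metrics, nbirth_metrics)
```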

The NBIRTH message also includes all EON metrics that will be published by this Node and can be very large.

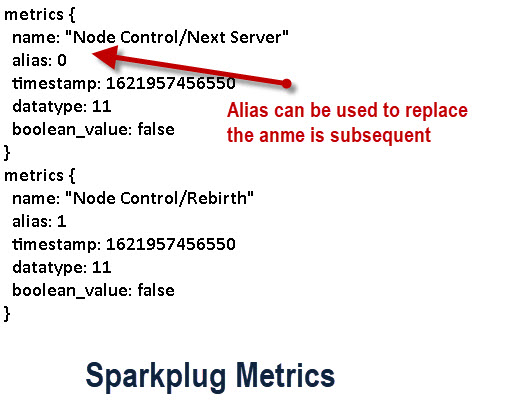

The NBIRTH message also contains metrics that advertise the commands the EON node will accept, as shown in the screen shot below:

If the control application publishes a message with the metric name Node Control/Next Server then the EON node will try to move to the next MQTT broker in its list.
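One way an EON node might handle that command is sketched below. The class and function names and the broker host names are hypothetical, and wrapping back to the first broker after the last one is my assumption.

```python
class BrokerList:
    """Ordered list of MQTT brokers an EON node can connect to."""

    def __init__(self, brokers):
        if not brokers:
            raise ValueError("at least one broker is required")
        self._brokers = list(brokers)
        self._index = 0

    @property
    def current(self) -> str:
        return self._brokers[self._index]

    def next_server(self) -> str:
        """Advance to the next broker, wrapping round at the end of the list."""
        self._index = (self._index + 1) % len(self._brokers)
        return self.current


def handle_node_command(metric_name: str, value, brokers: BrokerList) -> str:
    """Return the broker the node should be using after an NCMD metric arrives."""
    if metric_name == "Node Control/Next Server" and value:
        return brokers.next_server()
    return brokers.current


brokers = BrokerList(["broker-a.example", "broker-b.example"])
print(handle_node_command("Node Control/Next Server", True, brokers))
```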

MQTT Device Session Establishment and Messages

An MQTT sensor functions as an EON (Edge of Network) node.

It behaves as an EON + device node. When it goes online it does the following:

- Connects to the MQTT broker and sets the Last Will and Testament message (NDEATH)

- Subscribes to the NCMD and DCMD topics on spBv1.0/group/NCMD/myNodeName/# and spBv1.0/group/DCMD/myNodeName/#

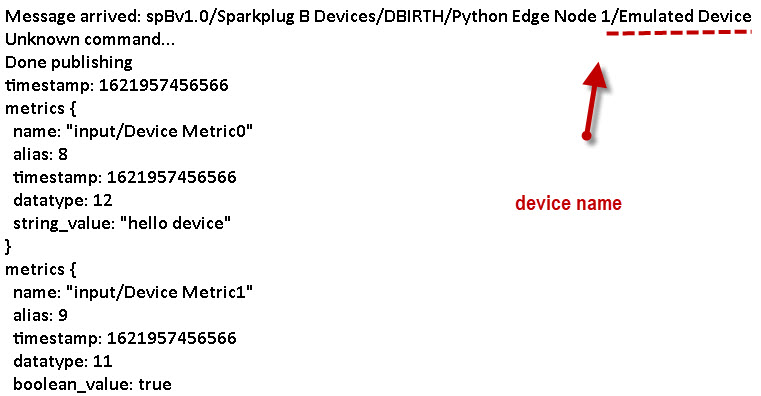

- Publishes a birth message DBIRTH on the topic spBv1.0/group/DBIRTH/myNodeName

An example DBIRTH Message is shown below.

Non-MQTT Devices, i.e. Legacy SCADA Devices

Legacy SCADA devices don’t support MQTT directly. Instead they connect to an EON node, probably using polling, and report data to the EON node.

The EON node will publish data for the device using the DDATA topic and receive commands for the device on the DCMD topic.

The EON node will publish DBIRTH and DDEATH messages for the connected devices.
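Because the EON node speaks for its devices, the device name appears as an extra level at the end of the topic. The sketch below builds and splits such topics. The helper names and the sample group, node and device names are hypothetical.

```python
NAMESPACE = "spBv1.0"


def device_topic(group: str, message_type: str, node: str, device: str) -> str:
    """Build a device level topic such as spBv1.0/group/DDATA/node/device."""
    return "/".join((NAMESPACE, group, message_type, node, device))


def parse_topic(topic: str) -> dict:
    """Split a Sparkplug B topic into its parts; device is None for node level topics."""
    parts = topic.split("/")
    if parts[0] != NAMESPACE or len(parts) not in (4, 5):
        raise ValueError(f"not a Sparkplug B topic: {topic!r}")
    parts += [None] * (5 - len(parts))
    keys = ("namespace", "group", "message_type", "node", "device")
    return dict(zip(keys, parts))


# Topic the EON node publishes on for a polled legacy device
print(device_topic("plant1", "DDATA", "eon1", "pump7"))

# Topic on which a command for that device arrives
print(parse_topic("spBv1.0/plant1/DCMD/eon1/pump7"))
```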

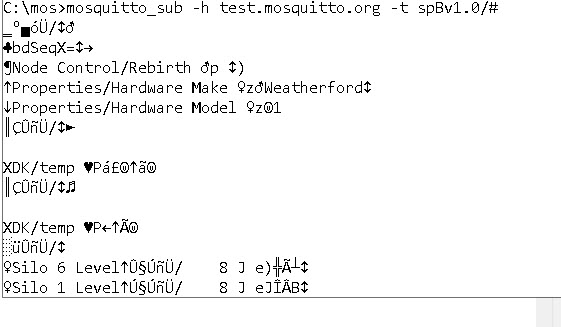

Using The MQTT Tools mosquitto_pub and mosquitto_sub with Sparkplug

Unfortunately these tools are of limited use with Sparkplug. They can subscribe to the topics, but they don’t understand the protocol buffers message payload, as shown in the screen shot below:

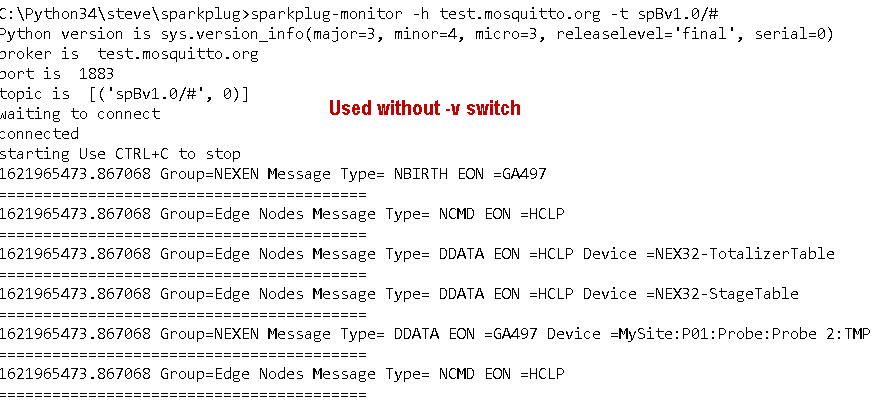

Python Sparkplug Message Monitor

This is an extension of the MQTT monitor I created a few years ago. It allows you to subscribe to a Sparkplug topic and displays data in a readable format.

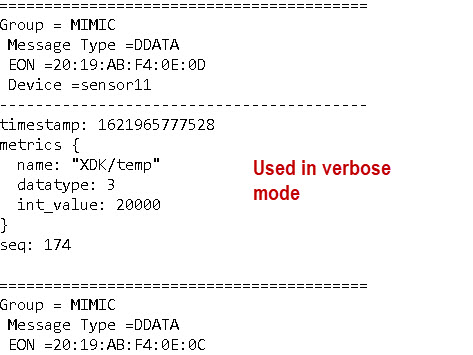

The -v switch displays both the topic and the message. The default is to display the topic only.

Used in verbose mode e.g.

sparkplug-monitor -h test.mosquitto.org -t spBv1.0/# -v

we get.

To work, the monitor needs to decode the Google protocol buffers payload. This uses two files that are available on GitHub and are also included with the download. They are:

- sparkplug_b.py

- sparkplug_b_pb2.py

I placed them in the same folder as the Sparkplug monitor file but they can go anywhere provided they are locatable by the module.
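To give an idea of what the decoding step involves, here is a rough sketch. It is not the monitor's actual code. `payload_cls` is assumed to be the `Payload` class from sparkplug_b_pb2.py, `ParseFromString` and `WhichOneof` are the standard methods of generated protocol buffers classes, and the field names (`seq`, `timestamp`, `metrics`, and the `value` group) are my reading of the Sparkplug B schema.

```python
def summarise_payload(raw: bytes, payload_cls):
    """Decode a Sparkplug payload and return one readable line per metric."""
    payload = payload_cls()
    payload.ParseFromString(raw)  # raw is the MQTT message payload
    lines = [f"seq={payload.seq} timestamp={payload.timestamp}"]
    for metric in payload.metrics:
        # Only one of int_value, float_value, string_value and so on is set
        field = metric.WhichOneof("value")
        value = getattr(metric, field) if field else None
        lines.append(f"{metric.name} (alias {metric.alias}, type {metric.datatype}) = {value}")
    return lines


# Intended use inside an MQTT on-message handler (needs sparkplug_b_pb2.py on the path):
#   from sparkplug_b_pb2 import Payload
#   for line in summarise_payload(msg.payload, Payload):
#       print(line)
```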

Summary

Sparkplug message payloads use Google protocol buffers for encoding the message data.

The contents of a message depend on the message type, and each message type has mandatory fields that are detailed in the specification.

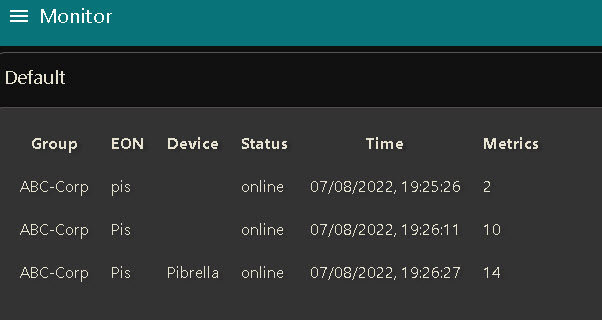

Sparkplug and Node-Red

I have a demo node-red Sparkplug monitor that you are free to try and feedback is appreciated.

Questions and Opinions

I like the topic structure and the birth messages but find Google protocol buffers complex when compared to JSON, but they may be required in certain use cases.

I can see a case for a hybrid model of Sparkplug type topic structure and JSON payloads.


Hi Steve,

great articles, they often have helped me understanding technical details.

At the moment I am playing with SparkPlug B and I was wondering, is there a way to define the physical units for a variable I am transmitting with SparkPlug?

The specs say on page 44 that the payload has this format:

{

“timestamp”: ,

“metrics”: [{

“name”: ,

“alias”: ,

“timestamp”: ,

“dataType”: ,

“value”:

}],

“seq”:

}

so I don’t see any place for units, which is kind of shortcoming for me for such a “hyped” protocol.

Just seeing lots of buzz if SparkPlug or OPC-UA are better.

thanks

Hi

Never noticed that. I’m not sure how fixed the message data is. I will have to check, as it uses Google protocol buffers.

My personal preference is to use the topic structure but a JSON message payload. They could easily have included this in the specification just by using a different topic prefix, spBv1.json for example.

I will try and do more research on it.

Rgds

Steve

On page 65 there is an example for a real world message where the unit is added in the name:

{

“timestamp”: 1486144502122,

“metrics”: [{

“name”: “Supply Voltage (V)”,

“timestamp”: 1486144502122,

“dataType”: “Float”,

“value”: 12.3

}],

“seq”: 2

}

this is imho a brutal bad move

Hi Steve, when the NDEATH is in the will message, wouldnt there be an ordering problem with publishes and the disconnect? Because sending of the LWT can happen before the MQTT broker handled the incoming publish messages.

so a subscriber might see messages in this order

NBIRTH,

NDATA,

NDATA,

NDEATH,

NDATA

and the publisher could have done this.

CONNECT(BIRTH, will(DEATH)),

PUBLISH(DATA),

PUBLISH(DATA),

PUBLISH(DATA),

DISCONNECT(sendWill)

The will message (NDEATH) is sent to the broker as part of the connect packet. The NBIRTH is a published message once connected.

The NDEATH is sent by the broker, but not on a normal disconnect, only on an unexpected one such as a network failure. If the client disconnects normally then it needs to publish the NDEATH itself before disconnecting.

Rgds

Steve

Hi steve,

I’m using mosquitto as my client library to publish device data to Ignition Scada broker..However while connecting to broker I’m setting the LWM as sparkplug NDEATH payload, but in mqtt.fx the LWM is shown as “Failed to parse the payload”

Can we use google protobuf format for setting the LWM or it should be plain text ?

Can we set LWM as NDEATH or it should be only state topic according to sparkplug specification?

How state topic will look like, can you please give some information about state topic, how it will work and wht message it should contain and it should be in plain text or protobuf ?

Can you please provide your valuable inputs on above queries?

Thanks,

NANDHINI

All messages use Google protocol buffers, with the exception of the state topic for the primary application, which uses plain text.

The LWM message is used as NDEATH.

Most tools will not display the messages correctly because they use Google protocol buffers. There is a Python script with the tutorial that you can download, and it will display them.

Rgds

Steve

Thanks steve, for the knowledge

Hi, steve

1) Is it only way that during connect to MQTT broker only we have to set will message and its topic or after connection establishment also we have set the will topic and message

2) Can we set more than one will topic and will payload or only one will payload/topic is allowed per connection

Please give your valuable feedback on above 2 points.

The will data is sent as part of the connection packet and is a single topic and payload.

Rgds

Steve

Thanks steve for the info

Rgds,

Nandhini

Hi steve,

In Sparkplug NBIRTH Payload, do we need to send the following metrics mandatory to any configured Client or user can define their own metrics…….If mandatory, can you please give some info abt these metric (why we need and how it will work)

1) “NodeControl/NextServer”

2) “NodeControl/Rebirth”

Please provide you valuable inputs..

You only send what is supported. If you don’t have another broker then NextServer makes no sense. However, Rebirth does, as it tells the node to republish.

Rgds

Steve

The Sparkplug messages monitored by sparkplug-monitor at the end of your article are

generated 24/7 by our Sparkplug lab at https://mqttlab.iotsim.io/sparkplug .

You can use the free lab to generate any tag with any value at any time to one of the

public brokers.

Hi Steve,

How to read signed integer value from metric. For example -32, it comes as 4294967264 while reading I have an option to read integer is int_value() which return unsigned int. Is there any other method to read signed integers.

Regards,

Rahul

Hi

What language are you using?

rgds

steve

C++

Sorry but I don’t know as I don’t program C++.

Rgds

Steve

No Problem. Thanks for response.
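On the signed integer question above: 4294967264 is what -32 looks like when its 32-bit two's complement bit pattern is read as an unsigned number, since 4294967296 - 32 = 4294967264. Assuming that is what is happening here, the value can be converted back with a little arithmetic. The sketch below is in Python rather than C++, and the function name is my own, but the same arithmetic applies in any language.

```python
def to_signed(value: int, bits: int = 32) -> int:
    """Reinterpret an unsigned integer as a two's complement signed integer."""
    if value >= 1 << (bits - 1):   # top bit set, so the number is negative
        return value - (1 << bits)
    return value


print(to_signed(4294967264))  # prints -32
```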

The Sparkplug protocol does work with mosquitto, you just have to modify the code that publishes the payload to publish a string version of the payload rather than the string serialized byte array of the payload.

With sparkplug specification if we use shared subscription. for example 2 client connected and subscribed with shared topic to broker and if i try to refresh the broker the DBIRTH packet is only send to client which is connected first to broker another client is not getting DBIRTH packets.

Is this the actual use case?

DBIRTH packets will not shared between clients?

only DDATA is shared between the clients using shared subscription?

Can someone please provide any answer or official documentation about this use-case..

Shared subscriptions are for load sharing and use special topics starting with $share, so I’m surprised by this. Are the clients subscribed to this topic, and why do they need to see it?

Would like to speak to you about some quick consulting on how to incorporate SparkPlug w our product. Please drop me an email and we can discuss. I can be reached at +1 561 302-3395, a text messaging indicating who you are would be useful.

Thanks

Steve you are THE man! Thanks for all of your posts!!

Hi, thanks for your article. It certainly clears some fog around the topic for me.

I’m considering selecting Sparkplug over some proprietary topic and payload spec, but I was a bit surprised to see no schema validation (using the NBIRTH payload as schema). Is it just up for the client and host application developers to implement this where needed? Or is work done in that area already?

Also, could you recommend a method to -within the spec- include metric units and bounds into the payload? This could be useful for SCADA ui building. For instance a temperature sensor reporting in Celsius with a range of -50 to +50. You could have metrics “min” “max” and “unit” in the NBIRTH but there’s also the “properties” attribute of “metric”.

Hi

Although I like the Sparkplug idea I do find the payload structure complex. You might want to look at using JSON encoded data for the payload.

Difficult to say more without more knowledge of what you data looks like.

Rgds

Steve

Thank for your response. I thought the whole point of Sparkplug was to promote device interoperability on the MQTT level. In that context I shouldn’t be knowing what my data looks like, a Sparkplug NBIRTH message should tell me, right? If I’d just swap out the protobuf payload for JSON, I wouldn’t be complying to the standard.

Agree but does your application fit well with the standard? It really all depends on how standards compliant you want to be.

There is no doubt that there are many systems out there already that have their own unique topic and payload design. Standardisation becomes important when integrating with other systems. If you will need to do that in the future then you should go with the standard.

Rgds

Steve

Hi Steve, I’ve just finished reading the Sparkplug B specification document and I have not implemented it yet, but I’m confused a little, do we have to set the NBREATH and DDEATH Messages manually??

Yes, the script you use needs to send them. I have added the example.py script to the download. Take a look, as it shows you how to do it.

Rgds

Steve

Hi Steve, would it be a bad idea or overkill to use Sparkplug B specification for IoT systems whose architecture is only Client + Broker + Server and doesn’t include any EoNs?

I think Sparkplug is probably overkill and complicated for most small to medium projects. In a large industrial setting it makes sense, but for a few dozen nodes, no.

So in your case I would say forget it.